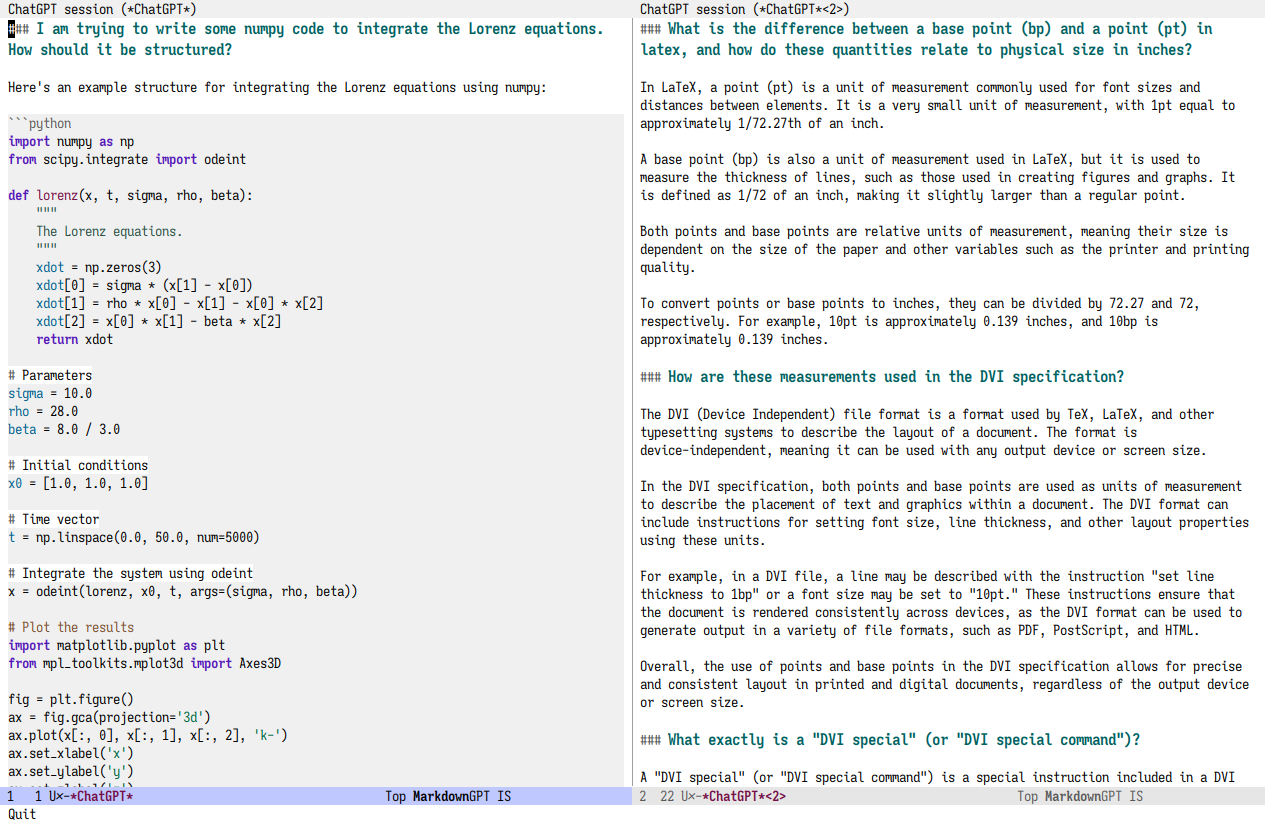

GPTel: A simple ChatGPT client for Emacs

GPTel is a simple, no-frills ChatGPT client for Emacs.

- Requires an OpenAI API key.

- No external dependencies, only Emacs. Also, it's async.

- Interact with ChatGPT from any buffer in Emacs.

- ChatGPT's responses are in Markdown or Org markup (configurable).

- Supports conversations (not just one-off queries) and multiple independent sessions.

- You can go back and edit your previous prompts, or even ChatGPT's previous responses when continuing a conversation. These will be fed back to ChatGPT.

Installation

GPTel is on MELPA. Install it with M-x package-install⏎ gptel.

(Optional: Install markdown-mode.)

Straight

(straight-use-package 'gptel)

Installing the markdown-mode package is optional.

Manual

Clone or download this repository and run M-x package-install-file⏎ on the repository directory.

Installing the markdown-mode package is optional.

Doom Emacs

In packages.el

(package! gptel)

In config.el

(use-package! gptel
 :config
 (setq! gptel-api-key "your key"))

Spacemacs

After installation with M-x package-install⏎ gptel:

- Add gptel to dotspacemacs-additional-packages

- Add (require 'gptel) to dotspacemacs/user-config

Usage

Procure an OpenAI API key.

Optional: Set gptel-api-key to the key or to a function that returns the key (more secure).
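As an illustration of the function form, here is a minimal sketch that reads the key from Emacs's built-in auth-source library instead of storing it in your init file. The host name "api.openai.com" is only a lookup label assumed for this example; use whatever entry name you put in your own authinfo file.

```elisp
(require 'auth-source)

;; Assumption: ~/.authinfo or ~/.authinfo.gpg contains a line such as
;;   machine api.openai.com login apikey password <your key>
;; The host and login values are labels chosen for this example.
(setq gptel-api-key
      (lambda ()
        ;; Returns the password of the first matching entry, or nil.
        (auth-source-pick-first-password :host "api.openai.com")))
```

With this in place the key never appears in plain text in your configuration, and it can be kept in an encrypted .authinfo.gpg file.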

In a dedicated chat buffer:

- Run M-x gptel to start or switch to the ChatGPT buffer. It will ask you for the key if you skipped the previous step. Run it with a prefix-arg (C-u M-x gptel) to start a new session.

- In the gptel buffer, send your prompt with M-x gptel-send, bound to C-c RET.

- Set chat parameters (GPT model, directives etc) for the session by calling gptel-send with a prefix argument (C-u C-c RET).

That's it. You can go back and edit previous prompts and responses if you want.

The default mode is markdown-mode if available, else text-mode. You can set gptel-default-mode to org-mode if desired.
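A minimal sketch of that setting, assuming gptel-default-mode takes a major-mode symbol as the sentence above suggests:

```elisp
;; Use Org markup instead of Markdown in dedicated gptel buffers.
;; Assumption: the variable holds a major-mode symbol.
(setq gptel-default-mode 'org-mode)
```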

In any buffer:

- Select a region of text and call M-x gptel-send. The response will be inserted below your region.

- You can select both the original prompt and the response and call M-x gptel-send again to continue the conversation.

- Call M-x gptel-send with a prefix argument to set chat parameters (GPT model, directives etc) for this buffer, or to start a dedicated session from the selected region.
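If you use gptel-send from arbitrary buffers often, a global key binding saves typing the M-x command each time. This is only a sketch: the key C-c g is a hypothetical choice for this example, not a binding that gptel sets up, and it assumes gptel is installed so that the command is available.

```elisp
;; Assumption: "C-c g" is an arbitrary, user-chosen key; pick any free one.
(global-set-key (kbd "C-c g") #'gptel-send)
```

Prefix arguments still work with the binding, so C-u C-c g would open the chat parameters in this setup.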

Why another ChatGPT client?

Existing Emacs clients don't reliably let me use ChatGPT the simple way I can in the browser. They will get better, but I wanted something for now.

Also, AI-assisted work is a new way to use Emacs. It's not yet clear what the best Emacs interface to tools like ChatGPT is.

- Should it be part of CAPF (completion-at-point-functions)?

- A dispatch menu from anywhere that can act on selected regions?

- A comint/shell-style REPL?

- One-off queries in the minibuffer (like shell-command)?

- A refactoring tool in code buffers?

- An org-babel interface?

Maybe all of these, I don't know yet. As a start, I wanted to replicate the web browser usage pattern so I can build from there – and don't need to switch to the browser every time. The code is fairly simple right now.

Will you add feature X?

Maybe, I'd like to experiment a bit first.